The Pursuit of Artificial Common Sense: Understanding AI's Limits


The Quest for Artificial Common Sense

On July 19th, a blog post titled “Feeling unproductive? Maybe you should stop overthinking.” was published online. The 1,000-word self-help piece argues that overthinking hampers our creativity and that we should focus more on the present moment:

“In order to accomplish tasks, it may be beneficial to reduce our thinking. This might seem counter-intuitive, but sometimes our thoughts obstruct the creative process. We often perform better when we momentarily ‘tune out’ external distractions and concentrate on what is immediately in front of us.”

This article was generated by GPT-3, OpenAI's 175-billion-parameter language model, which was trained on a dataset of nearly half a trillion tokens (words and word fragments). UC Berkeley student Liam Porr wrote only the title and let the algorithm produce the rest. The “fun experiment” was meant to test whether the AI could fool readers into believing a human had written it. GPT-3 clearly struck a chord: the post quickly rose to the top of Hacker News.

Yet today's AI systems present a paradox. While some of GPT-3’s output arguably passes the Turing test, convincing people that a human wrote it, these models often fail at the most basic tasks. AI researcher Gary Marcus once asked GPT-2, the predecessor of GPT-3, to finish this sentence:

“What happens when you stack kindling and logs in a fireplace and then drop some matches is that you typically start a …”

The obvious answer is “fire,” which any child would supply. Instead, GPT-2 responded with “ick.”

GPT-2 had failed Marcus's test.

Human communication is an optimization problem: the speaker uses as few words as possible to convey a thought, leaving out everything the listener can be expected to know already. We call this unspoken, shared knowledge common sense. Because the listener has access to the same common sense as the speaker, they can decode the utterance back into its intended meaning.

By its nature, common sense is rarely written down. As natural language understanding (NLU) expert Walid Saba points out, we don’t say:

“Charles, who is a living young human adult, and who was in graduate school, quit graduate school to join a software company that had a need for a new employee.”

Instead, we simply say:

“Charles quit graduate school to join a software company.”

We assume our conversational partner knows the common sense rules underneath: one must be alive to attend school or join a company, one must be in graduate school to quit it, and a company must have a vacancy to fill. Every human utterance is therefore packed with common sense that goes unstated. In this respect, common sense is like dark matter in physics: invisible, yet it makes up a large share of our communication.
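To make the decoding idea concrete, here is a minimal Python sketch, assuming (unrealistically) that the shared common sense could be written down as a lookup table. The table `SHARED_COMMON_SENSE` and the function `decode` are hypothetical illustrations, not part of any real system: the speaker sends only the events, and the listener expands them back into the facts they presuppose.

```python
# Hypothetical, hand-written "common sense" shared by speaker and listener.
# Each key is an event a speaker might mention; each value lists the
# unstated facts a listener is expected to fill in.
SHARED_COMMON_SENSE = {
    "quit graduate school": [
        "{who} is alive",
        "{who} was in graduate school",
    ],
    "join a software company": [
        "{who} is alive",
        "the company needed a new employee",
    ],
}


def decode(who: str, events: list[str]) -> list[str]:
    """Expand a terse utterance into the facts it silently presupposes."""
    facts: list[str] = []
    for event in events:
        for template in SHARED_COMMON_SENSE.get(event, []):
            fact = template.format(who=who)
            if fact not in facts:  # skip facts already implied by an earlier event
                facts.append(fact)
    return facts


# "Charles quit graduate school to join a software company."
for fact in decode("Charles", ["quit graduate school", "join a software company"]):
    print(fact)
```

The sketch only works because the table exists; in real conversation, that table is never written down.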

How Can We Teach Machines Artificial Common Sense?

How do we give a machine common sense? How do we teach it, for instance, that we drop a match on kindling to start a fire?

A Brute-Force Strategy

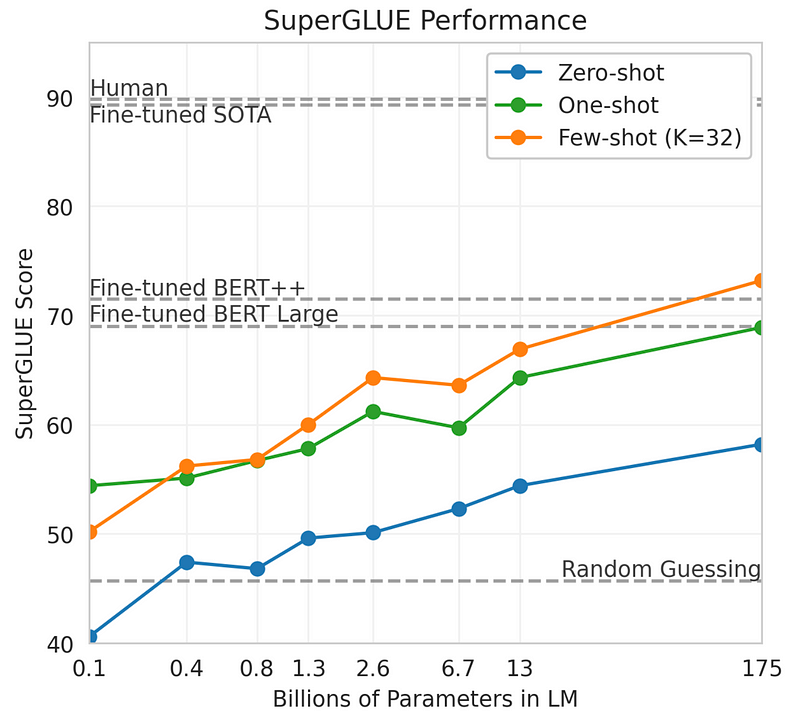

There’s a belief that we can achieve artificial common sense through brute force, as suggested by the slope of the curve in the following plot:

This graph, from the GPT-3 research paper “Language Models are Few-Shot Learners,” shows the model's performance on the SuperGLUE benchmark as a function of its number of parameters. SuperGLUE contains demanding semantic tasks such as Winograd schemas:

“The trophy doesn’t fit into the brown suitcase because it’s too small. What is too small?”

The answer is “the suitcase.”
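Winograd schemas come in pairs: swap a single word (“small” for “big”) and the correct answer flips. The minimal Python sketch below, with hypothetical names `WinogradItem` and `first_noun_baseline`, shows why this defeats surface heuristics: any rule that ignores what “small” and “big” mean gives the same answer to both sentences, so it gets exactly one of the two right.

```python
from dataclasses import dataclass


@dataclass
class WinogradItem:
    sentence: str
    candidates: tuple[str, str]  # the two noun phrases "it" could refer to
    answer: str


# The schema from the text plus its twin: changing one word flips the answer.
ITEMS = [
    WinogradItem(
        "The trophy doesn't fit into the brown suitcase because it's too small.",
        ("the trophy", "the suitcase"),
        "the suitcase",
    ),
    WinogradItem(
        "The trophy doesn't fit into the brown suitcase because it's too big.",
        ("the trophy", "the suitcase"),
        "the trophy",
    ),
]


def first_noun_baseline(item: WinogradItem) -> str:
    """A surface heuristic: resolve 'it' to the first candidate mentioned."""
    return item.candidates[0]


correct = sum(first_noun_baseline(item) == item.answer for item in ITEMS)
print(f"{correct}/{len(ITEMS)} correct")
```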

Notably, even at 175 billion parameters the curve has not flattened out, which suggests that still larger models, with trillions of parameters, might eventually arrive at artificial common sense. This is known as the ‘brute-force’ hypothesis. Whether it holds remains unproven. One counter-argument is that a machine cannot learn what is never written down, and common sense, as we saw, rarely is.

Endless Rules and Their Complexity

If our goal is to teach machines common sense, why not simply write down all the rules and hand them to the machine? That was the vision Douglas Lenat pursued in the 1980s, when he assembled computer scientists and philosophers to build a common sense knowledge base called Cyc. Today, Cyc contains 15 million rules, such as:

- A bat has wings.

- Because it has wings, a bat can fly.

- Because a bat can fly, it can travel from place to place.

The problem with a hard-coded system like Cyc is that the rules never end: every rule has exceptions (a newborn bat cannot fly), and the exceptions have exceptions of their own. The rule base grows explosively, becoming hard for humans to maintain and straining the machine’s memory.
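A toy version of this idea, written in Python rather than Cyc’s own language (CycL), chains rules forward from a starting fact. The rules, the `EXCEPTIONS` table and the `infer` function are hypothetical illustrations, not Cyc’s actual representation.

```python
# Hypothetical Cyc-style rules as (premise, conclusion) pairs.
RULES = [
    ("is a bat", "has wings"),
    ("has wings", "can fly"),
    ("can fly", "can travel from place to place"),
]

# Hypothetical exceptions: any of these facts blocks the conclusion.
# Each entry is one more rule that someone had to think of and write down.
EXCEPTIONS = {
    "can fly": ["is a newborn", "has a broken wing", "is dead"],
}


def infer(facts: set[str]) -> set[str]:
    """Forward chaining: apply rules until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in RULES:
            blocked = any(e in derived for e in EXCEPTIONS.get(conclusion, []))
            if premise in derived and conclusion not in derived and not blocked:
                derived.add(conclusion)
                changed = True
    return derived


print(sorted(infer({"is a bat"})))
print(sorted(infer({"is a bat", "has a broken wing"})))
```

Even this toy shows the problem. Blocking “can fly” also blocks “can travel from place to place”, yet a bat with a broken wing can still crawl; fixing that needs yet another rule, which will have exceptions of its own.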

It is perhaps unsurprising, then, that despite decades of effort Cyc has not yet succeeded, and it may never do so. University of Washington professor Pedro Domingos called Cyc “the biggest failure in the history of AI.” Oren Etzioni, CEO of the Allen Institute for Artificial Intelligence, was similarly skeptical, telling Wired: “If it worked, people would know that it worked.”

A Hybrid Approach to Artificial Common Sense

So how do we build AI that has artificial common sense? There are valid arguments against both brute-force models and hard-coded rules. What if we could combine the strengths of both?

Enter COMET, a transformer-based model developed by Yejin Choi and her team at the University of Washington. COMET is first trained on a ‘seed’ commonsense knowledge base (such as ATOMIC or ConceptNet) and then extends that knowledge by generating new commonsense inferences for inputs it has never seen. In this way, COMET can ‘bootstrap’ artificial common sense. In their paper, the authors report that COMET “frequently produces novel commonsense knowledge that human evaluators deem to be correct.”

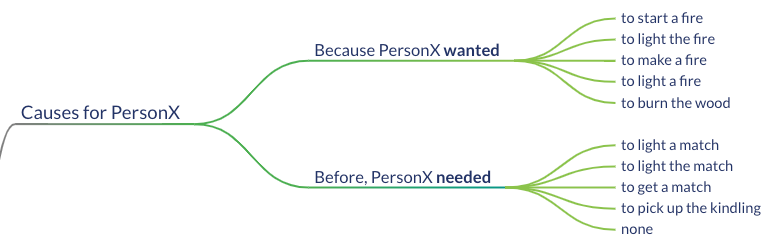

As a test, I entered “Gary stacks kindling and logs in a fireplace and then drops some matches” into the COMET API, and here’s part of its output graph:

COMET correctly infers that Gary intended to light a fire, and that “as a result, Gary feels warm.” That is a far better answer than GPT-2’s “ick” in response to a similar prompt.
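One way to read COMET’s output graph is as a list of (event, relation, inference) triples. COMET was trained on knowledge bases such as ATOMIC, whose relation types include xIntent (“because PersonX wanted”) and xReact (“as a result, PersonX feels”). The minimal sketch below, assuming that representation, stores the two inferences described above as triples and turns them back into sentences. The class `Inference`, the `TEMPLATES` table and `verbalize` are hypothetical, not the COMET API, and the inference wording is paraphrased from the text rather than copied from COMET’s raw output.

```python
from typing import NamedTuple


class Inference(NamedTuple):
    head: str      # the input event
    relation: str  # ATOMIC-style relation name
    tail: str      # the inferred piece of common sense


# English templates for two ATOMIC relation types.
TEMPLATES = {
    "xIntent": "Because {x} wanted {tail}.",
    "xReact": "As a result, {x} feels {tail}.",
}

EVENT = "Gary stacks kindling and logs in a fireplace and then drops some matches"

# The two inferences described in the text, stored as triples.
graph = [
    Inference(EVENT, "xIntent", "to light a fire"),
    Inference(EVENT, "xReact", "warm"),
]


def verbalize(inference: Inference, subject: str) -> str:
    """Render one triple as a sentence using its relation's template."""
    return TEMPLATES[inference.relation].format(x=subject, tail=inference.tail)


for inference in graph:
    print(verbalize(inference, "Gary"))
```

Storing knowledge as triples rather than free text is what lets a system like COMET grow its knowledge base: each generated inference is just one more row.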

So, could COMET be the key to solving the artificial common sense conundrum? Time will tell. If COMET achieves human-level performance on the SuperGLUE benchmark, it would represent a significant advancement in the field.

Skeptics, however, might argue that artificial benchmarks cannot truly measure common sense. Once a benchmark becomes established, researchers may start to overfit their models to it, tuning them until they score well on that particular test, so that the results stop reflecting general ability. An alternative is to use the same intelligence tests we use for humans. For example, Stellan Ohlsson and colleagues at the University of Illinois propose assessing artificial common sense with IQ tests designed for young children.
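The overfitting worry can be made concrete with a small simulation, a minimal sketch under deliberately extreme assumptions: every candidate model is a coin-flipping guesser with a true accuracy of exactly 50%, and researchers keep whichever variant scores best on a fixed 100-question benchmark. The constants and the `score` helper are hypothetical.

```python
import random

random.seed(0)  # fixed seed so the run is reproducible

N_BENCHMARK = 100    # size of the fixed, public benchmark
N_FRESH = 10_000     # new questions that nobody has tuned against
N_CANDIDATES = 200   # model variants tried during development


def score(n_questions: int) -> float:
    """Accuracy of a pure guesser on n yes/no questions (true accuracy: 50%)."""
    return sum(random.random() < 0.5 for _ in range(n_questions)) / n_questions


# Evaluate every variant on the same benchmark and keep the best one,
# as a leaderboard-driven development loop would.
benchmark_scores = [score(N_BENCHMARK) for _ in range(N_CANDIDATES)]
best = max(benchmark_scores)

# A guesser's answers on new questions are fresh coin flips, so the chosen
# variant's accuracy on unseen data is simulated the same way.
fresh = score(N_FRESH)

print(f"best benchmark accuracy: {best:.2f}")
print(f"accuracy on fresh questions: {fresh:.2f}")
```

Selecting the best of 200 guessers typically yields a benchmark score well above 50%, while the same kind of guesser scores close to 50% on fresh questions. The gap is pure selection effect, which is why a high score on a heavily reused benchmark may say little about common sense.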

Caution: Don't Let Today's AI Deceive You

Finally, don't overestimate today’s AI. Reread GPT-3’s blog post and you will notice that many of its statements are superficial and generic, like the advice in a fortune cookie:

“The important thing is to keep an open mind and try new things.”

This superficiality may explain why the post fooled so many readers: shallow, generic statements conveniently mask a lack of genuine understanding.

Further Exploration of Artificial Intelligence

Douglas Lenat: Cyc and the Quest to Solve Common Sense Reasoning in AI | Lex Fridman Podcast #221 (YouTube)

This video explores Douglas Lenat's work on Cyc and his views on common sense reasoning in AI.

Mindscape 184 | Gary Marcus on Artificial Intelligence and Common Sense (YouTube)

In this episode, Gary Marcus discusses AI and common sense, offering valuable perspectives on the subject.