Meta's Code LLaMa: A Transformative Leap in Coding AI

Chapter 1: A New Era in Coding AI

Against the backdrop of recent reports from Stanford and Berkeley highlighting the declining accuracy of GPT-4 in coding tasks, Meta has unveiled Code LLaMa, a suite of large language models (LLMs) tailored for code completion. Beyond their strong performance, these models come with a range of features that could make Code LLaMa the go-to choice for developers. Best of all, they are available at no cost for both research and commercial use, a true blessing from the AI realm.

Open Source: The Future of AI

Since entering the generative AI space, Meta has taken a distinct path compared to other tech giants. Rather than investing heavily in proprietary models, as Microsoft did with OpenAI, Meta has embraced an open-source philosophy. Yann LeCun, Meta's Chief AI Scientist, is optimistic that open-source solutions can outpace proprietary offerings like ChatGPT. His belief is that vital technologies should be accessible to all.

This strategy aims to prevent any single corporation from monopolizing AI advancements. For instance, if a closed-source model is launched by a competitor, Meta can counter with an open-source alternative that offers similar capabilities, effectively neutralizing the competitive advantage.

Building on the recently launched LLaMa 2, Meta has now extended this approach with Code LLaMa, a family of nine new LLMs designed specifically for coding tasks.

The video titled "Why Meta Open Sourced their best LLM but Google didn't" delves into the strategic decisions behind Meta's open-source approach, contrasting it with Google's proprietary model.

The Power of Multitasking Models

A standout feature of the Code LLaMa models is their ability to multitask effectively. They have been trained for two key functions:

- Code Completion: Like existing code LLMs, these models generate code autoregressively, predicting each new token from the code written so far.

- Code Infilling: This capability allows them to fill in missing sections of code based on the surrounding context, enhancing their utility for programmers.
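The two functions can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Meta's implementation: `toy_next_token` stands in for a real model, and the sentinel strings `<PRE>`, `<SUF>`, `<MID>` and the `<FILL_ME>` placeholder are illustrative markers whose exact form and spacing depend on the tokenizer you actually use.

```python
def complete(prompt_tokens, next_token, max_new_tokens=8, stop="<EOT>"):
    """Autoregressive completion: append one predicted token at a time."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        token = next_token(tokens)  # the prediction sees everything so far
        if token == stop:
            break
        tokens.append(token)
    return tokens


# Hypothetical stand-in for a model: looks only at the last token.
TOY_TABLE = {
    "def": "add", "add": "(a,", "(a,": "b):",
    "b):": "return", "return": "a+b", "a+b": "<EOT>",
}


def toy_next_token(tokens):
    return TOY_TABLE.get(tokens[-1], "<EOT>")


# Illustrative sentinels marking prefix, suffix, and the middle to generate.
PRE, SUF, MID = "<PRE>", "<SUF>", "<MID>"


def build_infill_prompt(source, hole="<FILL_ME>"):
    """Split the source at the hole and rearrange it as prefix, suffix, middle."""
    prefix, suffix = source.split(hole, 1)
    return f"{PRE}{prefix}{SUF}{suffix}{MID}"


completed = complete(["def"], toy_next_token)

source = "def add(a, b):\n    <FILL_ME>\n\nprint(add(1, 2))\n"
prompt = build_infill_prompt(source)
```

The point of the rearrangement is that the model still generates left to right, but by the time it starts writing the missing middle it has already read the code that comes after the gap.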

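This multitasking ability becomes more significant when paired with the models' advances in long-sequence modeling, since both completion and infilling improve when the model can see more of the surrounding code.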

Long-Context Modeling with RoPE

Transformers have reshaped the AI landscape, yet they struggle with longer sequences. Rotary Positional Embeddings (RoPE) offer a promising solution. The method encodes each token's position by rotating its query and key vectors through an angle proportional to that position, which improves the model's understanding of token positions within lengthy sequences, a key requirement for complex coding tasks.

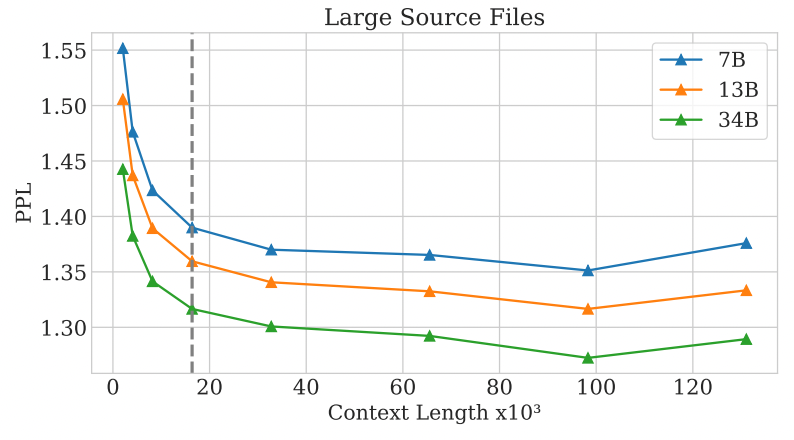

Moreover, RoPE preserves the relative positioning of tokens, so context is maintained even with extensive input. As a result, Code LLaMa models can handle inputs of up to 100,000 tokens.
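To make the relative-position property concrete, here is a minimal pure-Python sketch of rotary embeddings. It is an illustration under assumptions rather than Code LLaMa's actual code: the function names `rope` and `dot`, the four-dimensional vectors, and the default `base` of 10000 are chosen only for the example.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate each consecutive pair of `vec` by an angle proportional to `position`."""
    dim = len(vec)
    assert dim % 2 == 0, "RoPE works on pairs of dimensions"
    out = []
    for i in range(0, dim, 2):
        # Each pair gets its own frequency; later pairs rotate more slowly.
        theta = position * base ** (-i / dim)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [0.3, -1.2, 0.7, 0.5]   # toy query vector
k = [1.1, 0.4, -0.6, 0.9]   # toy key vector

# Same offset (3 tokens apart) at very different absolute positions.
near = dot(rope(q, 5), rope(k, 2))
far = dot(rope(q, 5005), rope(k, 5002))

# A different offset (1 token apart) gives a different score.
other = dot(rope(q, 5), rope(k, 4))
```

Here `near` and `far` agree up to floating-point error, while `other` differs: the attention score depends on how far apart two tokens are, not on where they sit in the sequence. That is the property that lets context carry over to very long inputs.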

The Implications of Nine Models

The Code LLaMa lineup comes in three sizes (7 billion, 13 billion, and 34 billion parameters) and three variants: foundational models for general code generation, models specialized for Python, and models fine-tuned to follow instructions, which are likely to be the primary choice for developers. Three sizes times three variants gives the nine models.
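The count of nine follows from simple combinatorics, sketched below. The sketch assumes the three variants Meta describes for the family (a foundational model, a Python-specialized model, and an instruction-following model); the label strings are illustrative, not official model identifiers.

```python
from itertools import product

SIZES = ("7B", "13B", "34B")                  # parameter counts named in the article
VARIANTS = ("Base", "Python", "Instruct")     # assumed variant labels, for illustration

# Every size is released in every variant: 3 x 3 = 9 models.
lineup = [f"Code LLaMa {variant} {size}" for variant, size in product(VARIANTS, SIZES)]

for name in lineup:
    print(name)
```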

The results have been impressive. According to Meta's reported results on coding benchmarks such as HumanEval and MBPP, Code LLaMa models outperform other publicly available code LLMs, making them a favorable alternative for programmers seeking robust solutions without the cost associated with proprietary models.

The second video titled "EMERGENCY EPISODE: Ex-Google Officer Finally Speaks Out On The Dangers Of AI!" explores the ethical considerations surrounding AI deployment, which is increasingly relevant as these technologies permeate daily life.

Conclusion: A Major Step Forward for AI and Open Source

The launch of Code LLaMa marks a significant triumph for both Meta and the AI community. By open-sourcing these advanced LLMs, Meta is democratizing access to cutting-edge technology, enabling researchers and developers to innovate further.

Meta's commitment to safety and ethical considerations is commendable, particularly as AI becomes more integrated into our lives. The Code LLaMa family exemplifies the ideal balance of performance, applicability, and ethical responsibility in modern AI research.